I am starting this article (which I will divide into multiple parts) on the day of the Immaculate Conception: December 8th. I want to talk about the current state of artificial intelligence as an aid to low-budget filmmaking. It will take some time, especially to try out the various services, as I don’t want to make the usual sterile list. It will also help us produce content for the films we will make in the near future.

I got the idea, albeit unintentionally, from Nicolas Perrier of the University of Lyon in France, through one of his posts on LinkedIn. Perrier is an expert in augmented and virtual reality innovation, and the post in question is about Plask, one of the many tools for creating 3D animations starting from a simple video. In practice, it’s Motion Capture without expensive and complex equipment. This technology may be of particular interest to us for producing animated videos at a fraction of the current cost, even with actors working remotely.

I’m also getting additional help, not just from Nicolas himself with his countless posts, but also from the website Futurepedia.io. It’s an “AI wiki”, featuring a selection of many tools currently available to the general public.

Let’s analyze some of these tools, specifically the ones that are useful in filmmaking: for writing, for the technical production of videos, and for voices. We’ll evaluate the quality of the results, conduct experiments, and learn about their costs.

To better understand how to use them in our low-budget films, I decided to create a short film (with very low expectations, just for technical experimentation) using them as much as possible.

Making videos with artificial intelligence.

Let’s divide the services into three main categories: writing, video, and audio. We start with writing, since we need the idea first.

Film writing with artificial intelligence

We need a story. Created by an AI? Let’s see, writing tools are not lacking. And if you don’t agree, you can always argue in the comments.

How does GPT-3 work?

Most public AI writing services are currently based on GPT-3, which has 175 billion machine learning parameters. There are actually many alternatives: BigScience Bloom, a recently launched large language model (with the advantage of being open source), or Luminous from the German company Aleph Alpha (with 200 billion parameters).

What are the parameters of an artificial intelligence?

Imagine having a task that requires predicting whether an image contains a cat or not. A machine learning model could be trained on many images labeled as “cat” or “not cat” to learn to recognize the distinctive features of cats.

To do this, the model uses a neural network, which is a set of interconnected nodes. Each node represents a computation performed on the input data. The parameters, or “weights,” are the values assigned to the connections between nodes, and they determine how strongly one node influences the next.

When the model sees a new image, these weights are used to perform a series of calculations that ultimately produce a prediction, such as “cat” or “not cat”. The number of weights used in the model is the number of parameters.
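To make the idea of “counting the weights” concrete, here is a minimal sketch in Python. It is not how GPT-3 or any real image classifier is built: the network size (4 input values, 3 hidden nodes, 1 output), the random weights, and the function names are all my own assumptions for illustration, and the network is untrained, so its answer is meaningless. It only shows where the parameters live and how they are counted.

```python
import math
import random

def make_layer(n_inputs, n_outputs, rng):
    """Create one fully connected layer: one weight per connection, one bias per node."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_inputs)] for _ in range(n_outputs)]
    biases = [0.0 for _ in range(n_outputs)]
    return weights, biases

def forward(layers, inputs):
    """Pass the inputs through every layer and return a score between 0 and 1."""
    values = inputs
    for weights, biases in layers:
        values = [
            1.0 / (1.0 + math.exp(-(sum(w * v for w, v in zip(row, values)) + b)))
            for row, b in zip(weights, biases)
        ]
    return values[0]

def count_parameters(layers):
    """Count every weight and every bias in the network."""
    n_weights = sum(len(row) for weights, _ in layers for row in weights)
    n_biases = sum(len(biases) for _, biases in layers)
    return n_weights + n_biases

rng = random.Random(0)
# Hypothetical toy network: 4 "pixel" values in, 3 hidden nodes, 1 output (the "cat" score).
layers = [make_layer(4, 3, rng), make_layer(3, 1, rng)]

score = forward(layers, [0.2, 0.9, 0.4, 0.7])
print("cat" if score > 0.5 else "not cat")
print("parameters:", count_parameters(layers))
```

This toy network has 19 parameters (12 + 3 weights, plus 3 + 1 biases). GPT-3 has 175 billion values of the same kind.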

In summary, parameters are like “adjustment values” that influence how a machine learning model processes data to make a prediction, and are modified during training to improve the model’s performance.

A model with many parameters will have more opportunities to adjust its calculations to fit the training data, but it may also be more prone to overfitting, meaning an excessive adherence to the training data and a poor ability to generalize.
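Overfitting is easier to see with numbers. The following is a toy sketch under my own assumptions: the data is invented, the “true rule” is simply “the label is 1 when x is at least 50”, and two training labels are deliberately wrong to simulate noise. A one-parameter model is compared with a “memorizer” that stores every training example; neither is a real neural network.

```python
# Toy task: the true rule is "label is 1 when x >= 50".
# Two training labels (x=20 and x=70) are deliberately wrong, to simulate noise.
train = [(5, 0), (12, 0), (20, 1), (31, 0), (44, 0),
         (55, 1), (63, 1), (70, 0), (82, 1), (95, 1)]
test = [(x, int(x >= 50)) for x in (8, 18, 22, 40, 52, 60, 68, 72, 90, 98)]

def accuracy(predict, data):
    """Fraction of examples for which the model's prediction matches the label."""
    return sum(predict(x) == label for x, label in data) / len(data)

def fit_threshold(data):
    """Small model: a single parameter, the cut-off that best fits the training data."""
    candidates = sorted(x for x, _ in data)
    best = max(candidates, key=lambda t: accuracy(lambda x: int(x >= t), data))
    return lambda x: int(x >= best)

def fit_memorizer(data):
    """Large model: stores every training example and copies the label of the closest one."""
    return lambda x: min(data, key=lambda pair: abs(pair[0] - x))[1]

small = fit_threshold(train)
large = fit_memorizer(train)

for name, model in (("1 parameter", small), ("memorizer", large)):
    print(name, "train:", accuracy(model, train), "test:", accuracy(model, test))
```

The memorizer is perfect on the training data (1.0) but drops to 0.6 on new data, because it has also learned the two wrong labels. The one-parameter model scores only 0.8 in training but 0.9 on new data.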

Other competitors of ChatGPT.

Other competitors are Nvidia with Megatron-Turing NLG (huge, but not available to the public), Google with GLaM, LaMDA and PaLM (yes, they don’t leave anything out), Meta with OPT, Amazon with AlexaTM, and various Chinese players such as Alibaba with its BaGuaLu.

To conclude, I mention GPT-J, another open source alternative to GPT-3.

Let’s quickly understand how GPT-3 works, using a rephrased response from the AI itself:

GPT-3 (Generative Pretrained Transformer 3) is a state-of-the-art language model developed by OpenAI. It uses a deep learning technique called “transformers” to generate human-like text based on a given input.

Here’s how GPT-3 works:

- The model is trained on a large set of texts generated by humans, such as books, articles, and websites. This training process allows the model to learn patterns and structures of natural language.

- When it receives an input, the model processes it using its layers of neural networks. Each layer performs a different task, such as analyzing the syntax, semantics, and context of the input.

- The model then generates a response based on the input and its understanding of natural language. It does so by predicting the next words in the sentence, using the patterns and structures learned during training.

- The model repeats this process several times, generating multiple responses to the input. These responses are then ranked and evaluated by the model, and the highest-scoring response is selected as the final output.

GPT-3 is known for its ability to generate text that is often difficult to distinguish from text written by a human being. It is used in various applications, such as language translation, text summarization, and text generation.
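The core idea in the description above, “predicting the next words using patterns learned during training”, can be sketched in a few lines of Python. This is a drastic simplification and certainly not GPT-3’s actual method: there is no transformer here, only a count of which word follows which, and the three training sentences and the function name `generate` are my own inventions.

```python
from collections import Counter, defaultdict

# Tiny hypothetical "training set"; a real model learns from billions of words.
corpus = (
    "maria finds a golden statue . "
    "jake finds a golden coin . "
    "sarah finds a golden statue ."
).split()

# "Training": count how often each word follows each other word.
next_word_counts = defaultdict(Counter)
for current, following in zip(corpus, corpus[1:]):
    next_word_counts[current][following] += 1

def generate(start, max_words=8):
    """Repeatedly append the most frequent next word until a full stop is reached."""
    words = [start]
    while len(words) < max_words and words[-1] != ".":
        candidates = next_word_counts[words[-1]]
        if not candidates:
            break  # the model has never seen anything after this word
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)

print(generate("jake"))
```

Starting from “jake”, the sketch produces “jake finds a golden statue .”: a sentence that never appears in the training text, assembled purely from the most frequent continuations. A large language model works on the same principle of predicting what comes next, but it takes the whole preceding context into account rather than just the last word.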

ChatGPT can tell stories for screenplay.

Let’s start directly with OpenAI’s product: ChatGPT. It is perhaps the tool of the moment; the first one to have made a leap towards the general public. It’s almost concerning how accurate and “real” it can be in conversations, but it has also been discussed too much and everywhere in the last few weeks.

I signed up on the OpenAI website and opened the chat to try talking to GPT-3 (I can’t imagine what GPT-4 will do next), asking it to write me a story with some dialogue for a computer-graphics short film. And well, it simply wrote it for me.

The story written by ChatGPT

This is the story as written by ChatGPT, with no modifications, translated into Italian (you can read the original in the English version of this article).

As the sun rose on the horizon, a small group of adventurers set out to find the lost city of gold. For months, they had been searching, following old maps and ancient legends in the hopes of striking it rich.

“Are you sure this is the right way?” asked Maria, the leader of the group. “I don’t see any sign of the city on the horizon”.

“Trust me, Maria,” replied Jake, the group’s ancient history expert. “The city is hidden in the depths of the jungle and it won’t be easy to find. But if we stick to the plan, we will find it”.

The group ventured into the dense jungle, hacking through the vines and undergrowth with their machetes. After several hours of grueling travel, they reached the edge of a clearing.

“Look!” Sarah, the youngest member of the group, shouted. “Down there! I see something shining in the sunlight!”.

The group rushed to the spot where Sarah had seen the glimmer, and sure enough, they found a small golden statue buried in the ground.

“This must be it!” exclaimed Maria. “We’ve found the lost city of gold!”.

The group started to dig, uncovering more and more treasures. They found gold coins, precious jewels, and ancient artifacts of great value.

“We’re rich!” exclaimed Jake, laughing with excitement. “We’ve found a treasure beyond our wildest dreams!”

But as they continued to dig, the ground beneath their feet began to rumble and shake. Suddenly, a huge stone door opened, revealing a hidden entrance to the lost city.

“Quick, everyone inside!” shouted Maria, leading the group into the dark tunnel. “We must find the source of the treasure before it is too late!”

The group went into the tunnel, lit by flashlights. They reached a large open chamber filled with treasures.

“Here we go!” shouted Sarah. “The Lost City of Gold!”

But as they explored the chamber, they heard a loud rumble. Suddenly, the ground beneath their feet gave way and they fell into a deep pit.

“Help!” cried Maria, as they fell into darkness. “We are trapped!”

But just when they thought all was lost, they heard a faint voice calling to them.

“Don’t worry, adventurers,” said the voice. “I am the guardian of the lost city and I will help you escape.”

With the help of the watchman, the group managed to get out of the pit and back to the surface. They came out of the jungle, triumphant and rich beyond expectation.

“We did it!” cried Jake, laughing with joy. “We have found the lost city of gold!”

Let us consider this first story. Of course, it didn’t make things easy for me… It could have set the story in the middle of a random city; the jungle, by contrast, will be more complex to recreate. But I have to play along; that’s the game.

The same story, in a short version

Oh, I also asked for a shorter version. It gave me a summary. Then I specified that I wanted a short version but with dialogue, and it simply did it. I am including it here for completeness:

As the group of adventurers searched for the lost city of gold, they came across a hidden entrance in the jungle.

“Look, over there!” said Sarah, pointing to the entrance.

The group rushed to the entrance and explored the city, finding treasure beyond their wildest dreams.

“We are rich!” shouted Jake.

But as they continued to search, the ground beneath them gave way and they fell into a pit.

“Help!” cried Maria.

A city watchman helped them escape and they emerged victorious and wealthy.

“We made it!” cried Jake.

ChatGPT automatically writes the script

I was considering adapting it into a screenplay myself, when I thought, “Why not ask ChatGPT?” No sooner said than done. It rewrote the story for me, ready for filming:

INT. JUNGLE – DAY

The sun rises on the horizon as a group of adventurers, MARIA, JAKE and SARAH, set out to find the lost city of gold.

MARIA

Are you sure this is the right way? I don’t see any sign of the city on the horizon.

JAKE

Trust me, Maria. The city is hidden deep in the jungle and it won’t be easy to find. But if we stick to the plan, we will find it.

The group goes into the dense jungle, cutting through the lianas and undergrowth with their machetes.

SARAH

Look! There! I see something shimmering in the sunlight!

The group rushes to the spot where Sarah saw the glitter and finds a small golden statue buried in the earth.

MARIA

This must be it! We have found the lost city of gold!

The group starts digging, discovering more and more treasures.

JAKE

We are rich! We have found a treasure beyond our wildest dreams!

But as they continue to dig, the ground beneath their feet begins to rumble and shake. Suddenly, a huge stone door opens, revealing a hidden entrance to the lost city.

MARIA

Quick, everyone inside! We must find the source of the treasure before it is too late!

The group enters the tunnel, lit by flashlights. They reach a large open chamber filled with treasures.

SARAH

That’s it! The lost city of gold!

But as they explore the chamber, they hear a loud rumble. Suddenly, the ground beneath their feet gives way and they fall into a deep pit.

MARIA

Help! We are in a trap!

But just when they think all is lost, they hear a faint voice calling to them.

GUARD (off-camera)

Don’t worry, adventurers. I am the guardian of the lost city and I will help you escape.

With the help of the watchman, the group manages to get out of the pit and back to the surface. They re-emerge from the jungle, triumphant and rich beyond expectation.

JAKE

We made it! We have found the lost city of gold!

Apart from the “INT. JUNGLE” heading (an interior scene in the jungle?), it is a good starting point. Still, some errors of form jumped out at Andrea Rocci, a “human” screenwriter, even without an in-depth analysis (we were chatting over a beer).

In fact, a script speaks in pictures, and phrases such as “discovering more and more treasures,” or “rich beyond expectation” are not at all clear. Which treasures? Statues, coins, anything else? And what do you mean by rich? Are they covered in gold? Are they dressed flamboyantly? Everyone with the latest iPhone and the keys to a Ferrari?

Not to mention the lack of descriptions of the environments. The jungle itself is left entirely to the imagination of the director, or the set designers (or 3D artists, whatever).

However, we must make a virtue of necessity; we will leave any choice at the discretion of the director (if he existed, at least…). We will try to find a good one on character.ai, perhaps. In fact, try it out and talk to artificial “characters.” Even Albert Einstein is there!

Prices

Here it’s simple: it doesn’t cost anything basically. A $20/month version is starting to be marketed in some countries, which removes some limitations (mainly due to the computing power needed to handle the millions of requests coming into OpenAI each day).

Alternatives to ChatGPT

At present GPT-3 is hard to beat… While waiting for the most illustrious candidates to come out (Google Bard soon), I asked ChatGPT itself about its competitors. It pointed me to ScriptBuddy, WriterDuet and AI Screenwriter to begin with. When I asked for more, it added Plotbot, Amazon Storywriter, and InkTip Script Listing. Okay, I thought that was enough… Except that the answer is actually partly false. Here we see the current limitations of this artificial intelligence, due in part (but not only) to the fact that its data only goes up to 2021.

To begin with, ScriptBuddy, originally based on GPT-2, has not been maintained for more than 3 years; we exclude it. WriterDuet, on the other hand, is most useful for formatting scripts. But it doesn’t write anything itself… AI Screenwriter I don’t think even exists; at least dear old Google doesn’t give me any results.

As for Plotbot, or rather Plot-bot, it actually generates movie plots using the GPT-2 engine. But, like ScriptBuddy, it has not been maintained for years, and to save time, I rule it out. The same is true for Amazon StoryWriter, while InkTip Script Listing is not for generating text.

Dramatron

Dramatron is a new alternative from DeepMind that promises exactly what we are looking for: writing screenplays for film and theater. Unfortunately, it was only available for a couple of days around December 9, and by the time I got around to trying it, it was too late.

It remains an interesting project to keep in mind when it is opened. For the time being, George Mike, author of this YouTube video, does not like it.

His comment? “…overall, it seemed insipid to me. And it didn’t give me much more than the dialogue that you find in all the movies. It clearly grasped the genre, but did not explore it in an interesting way. It did not advance the narrative”.

Rytr

Through Google and Futurepedia I then came to Rytr. A nice tool. I created an account, logged in, and found myself in the work area. It basically asks for the language, style and use case of the text to create, and then it gets to work. Since there was clearly no “film script” use case, I tried in the meantime with a tool called a “magic wand.”

I made the same request I had made to ChatGPT; the response, however, was almost hilarious:

In the name of Allah, the Compassionate, the Merciful

I believe

I believe in Allah, the One God

I believe in prophets and messengers

I believe in angels and scripture

I believe in the books of revelation

I believe in life after death and that on judgment day justice will be done.

- Sorry, what? You are saying “I believe” everything.

I don’t have time for that.

- I’m sorry, I don’t follow you.

- Why are you apologizing?

- What?

Let’s try something different and more specific… I asked “I need a script of about 2000 words, with dialogue, to make a CG short film. The title is: the lost city”, and set as a use case “story plot.” Prompt based on the previous ChatGPT output. But we are still a long way off, although we have a basic storyline:

In the near future, a crew of archaeologists and scientists on an exploratory mission to an unknown planet are captured and imprisoned by an ancient alien civilization.

The team is somewhat lost because of the language barrier and interactions with the aliens. They are kept in captivity for decades, exploring the ruins and discovering all the secrets of civilization.

They manage to return home, at the end. Scientists have learned many new things and are bringing them back to Earth to share with humans. However, they took so long that by now not only has the Earth changed, but there is no more time for these discoveries.

Same request, but with the magic command as the use case:

INT. LIVING ROOM – AFTERNOON

The room is well furnished and looks very comfortable. There is a door that leads to another room.

JOE: Good morning, John.

Morning, Joe. How are you this morning?

JOE: I’m fine, thank you for asking. Do you?

JOHN: I’m just fine! What’s on the agenda for today?

JOE: I’m going to catch up on my reading and then probably take a walk around the neighborhood. Do you have any programs

Okay, a useful tool for short texts but still far from total autonomy. Let’s move on to prices, although in our case I am keeping ChatGPT’s text.

Prices

Rytr has a completely free version, limited to 10,000 characters per month. Otherwise it costs $9 per month for 100,000 characters, or $29 per month for unlimited use.
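To get a feel for which plan a script like ours would need, here is a small back-of-the-envelope sketch using the prices quoted above. The function name is hypothetical, and the figure of roughly 6 characters per word (spaces included) is my own rough assumption, not something Rytr states.

```python
def cheapest_rytr_plan(characters_per_month):
    """Return (plan name, monthly cost in USD) based on the prices quoted in this article."""
    if characters_per_month <= 10_000:
        return "free", 0
    if characters_per_month <= 100_000:
        return "$9 plan", 9
    return "unlimited", 29

# Assumption: a 2,000-word script at roughly 6 characters per word, spaces included.
script_characters = 2_000 * 6
print(script_characters, cheapest_rytr_plan(script_characters))
```

Under that assumption, a single 2,000-word script is about 12,000 characters, which already exceeds the free tier and falls into the $9 plan.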

Shortly AI

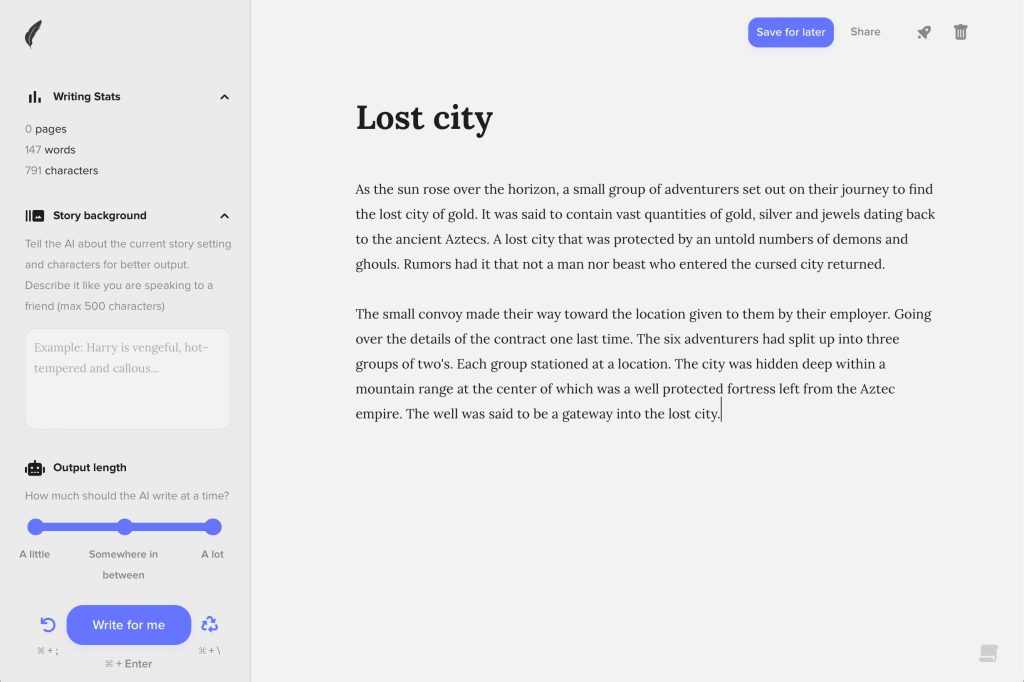

Another tool is Shortly AI, primarily designed to combat “writer’s block”. Write your text or a sentence, and it continues from there.

Since I could not ask it to write the story for me, I used the first sentence of the story generated by ChatGPT: “As the sun rose on the horizon, a small group of adventurers set out to find the lost city of gold.” Although based on the same language model, the continuation was clearly different.

This is the final text:

As the sun rose on the horizon, a small group of adventurers set out to find the lost city of gold. It was said that there were more than a thousand tons of gold in the lost city, but like all legends, the story of the treasure is told only to those who are worthy.

They had been on the road for two weeks and hoped to reach their destination by the end of the week. So far the road had been smooth, but when they approached a hill, there was a small construction on the road, which made the wagons slow down. The caravan had two large wagons carrying gold, while the rest were on horseback. The rest of the group was not much concerned about the delay, apart from the leader. To contain large amounts of gold, silver, and jewelry dating back to the ancient Aztecs. A lost city that was protected by untold numbers of demons and ghosts. It was said that not one man or beast who entered the cursed city returned.

The small convoy headed to the place indicated by the employer. They went over the details of the contract one last time. The six adventurers had divided into three groups of two. Each group had positioned itself in a location. The city was hidden in the depths of a mountain range at the center of which was a well-protected fortress left by the Aztec empire. The well was said to be a gateway to the lost city. Inside the fortress was to be a statue of an angel. It was a special stone tablet with an incantation that would concentrate the power of the jewel on the eighth son of an Aztec king.

Upon hearing of the strange fortress, the Aztec leader marched his army to attack it, but mysteriously one of the generals and his army were taken away to another kingdom and disappeared. The king then ordered the retreat. This was the beginning of problems. As they were leaving, they were attacked by large monsters that were thought to have been killed, but then were seen on top of the fortress. Battles continued throughout the following week, with the Aztecs defeated.

At the end, the king ordered the remaining troops to return to the city, saying that the previous week’s battles had awakened some ancient demons responsible for the disappearance of not only his son but also some of his generals and the remaining troops. The king had outlawed all kinds of magic and spells in his city, and everyone was to be executed. One young man was helped by his mother, also a sorceress, once she was discovered.

Ultimately, a good story but with little meaning. The AI took bits of text from here and there, but without connecting them in an “intelligent” way.

Prices

Shortly AI is certainly useful for adding a sentence or two and overcoming writer’s block, but it does not seem to be the best tool if you let it work entirely on its own.

Nor is its cost negligible. We are talking about $79 per month, or $780 per year. I used the free trial, which only requires registering an account.

WriterX

Let’s look at WriterX. It has templates for creating articles, social posts, text conclusions and many others. I expect similar results to Shortly, not being designed for whole stories.

Unfortunately, however, the free trial version does not allow access to all features, and I didn’t feel like paying for a service (and a fairly expensive one at that) without first trying it out, especially since I already have a free alternative that I am partially satisfied with.

Although I am evaluating it for its useful marketing features… Like the bio of my social channels, or any future help in writing blog articles. I also used it to write the meta description of this same article.

Prices

WriterX costs $29 a month in the standard version (basically the trial I had), or $59 a month to have unlimited text and functions. It is available in 25 languages.

Jasper AI

I also wanted to try Jasper AI, which is ultimately a graphical user interface for GPT-3 itself. It is perhaps the most publicized; you find it everywhere. But I never even got to use it: it forces you to enter your credit card even for the free version, and to verify the card it charges not a few cents but the entire first month: $29. I find that unprofessional, so I didn’t want to deal with them and asked for my money back immediately.

GPT-J and Writey AI

To get out of the GPT-3 universe, I wanted to try the open source GPT-J via the 6b.eleuther.ai website; however, it always failed with the message, “Unable to connect to the model. Please try again”. I also tried Writey AI, which works well but is too specialized in writing blog articles. For that purpose I recommend you check it out, if only because it offers 5 articles a month totally free.

Ultimately, I am tired and any further research seems futile. After all, ChatGPT’s text is valid (if you can call it an “automatic” text), so I would say let’s move on to the technical realization of the short film.

Creating 3D characters

Can an artificial intelligence generate 3D characters?

So we need the characters for our story, but is it really possible to generate them with A.I.? Spoiler: today, not well. There are many promises and some solutions that come close to the result, but it is not yet possible. Let us look at them in brief, as they will be useful in the near future. But then we will go on to figure out how to have the characters in our story now with little money.

PIFuHD

To start, there is PIFuHD, which is already available to the public and promises to create a 3D character from a single photo. It works, but even in the presentation videos the quality is noticeably far from what is acceptable for cinema.

Google DreamFusion

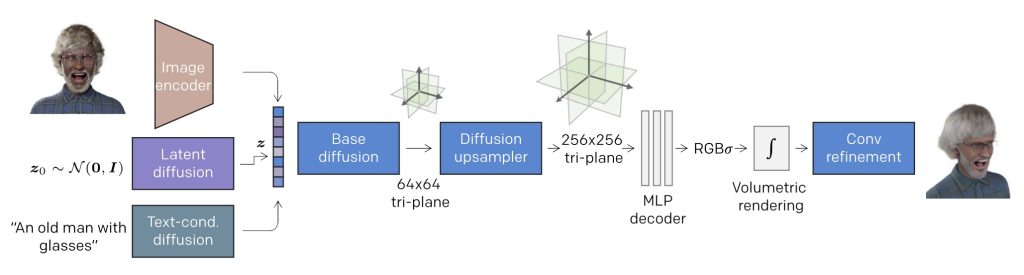

DreamFusion is one of the first A.I.s that can leverage a 2D text-to-image diffusion model (Google’s Imagen in the original paper; the same kind of technology as Stable Diffusion, used for the now widespread AI-generated images) to create 3D models.

Same problem as before: unsatisfactory quality even at first glance.

Microsoft Rodin Diffusion

A 2023 newcomer from Microsoft, Rodin Diffusion is not yet available to the public. It promises to create a realistic 3D avatar from a single photo. Well, judging from the photos released on their site, the hair… That is always the problem!

Nvidia Get3D

You certainly cannot miss the queen of graphics cards, Nvidia, among the 3D tools. And indeed its Get3D is superlative in creating 3D models from information learned from a dataset of 3D models.

The dataset is much more limited, and this is a disadvantage in the variety of 3D objects that can be realized. It is, however, open-source, a definite plus point, although the entire training must be done in one’s own system. This means high-end video cards galore… And they cost a little. Much.

Nvidia Magic3D

How did Nvidia solve the problem of the “limitation”, in terms of quantity, of objects that can be created by Get3D? As the article in The Decoder explains, simply copying Google… And trying to make its new Magic3D (researchers’ paper here) faster and more defined than DreamFusion. This video explains well how it works:

In practice, Get3D has a dataset based on other 3D models. Instead, Magic3D starts with images, themselves generated by A.I., paving the way for virtually infinite combinations.

Does it work well? What is certain is that it is not available to the public, but from the videos and examples on the Web it looks like a very promising technology. Although it still does not reach the necessary quality.

StyleGAN-NADA

For doing Pokemon or other fun things there is StyleGAN-NADA, trained following OpenAI’s CLIP (Contrastive Language-Image Pre-Training) model. It allows you to create images from just a textual description, without the need to see any references and without the need to collect additional training data.

It is also possible to modify existing images to make them similar to those in other domains, for example, using an image of a dog to generate a cat. The same approach can be applied to other generative architectures, opening up interesting possibilities for creating images quickly and accurately.

Pollinations

Among the “next steps,” Pollinations promises to do what we need it to do. From their website, “at the research level, our team is developing technology that allows people to generate 3D objects and avatars with the help of text alone”.

Here again, there is a wait. For now, it still allows you to do interesting things in the photo/video area. Maybe try it, however there is little of use for the purposes of this article.

Text2mesh

Small but interesting, Text2mesh is less of an exercise in style than its predecessors. Here you already have to have the model, but the AI promises to modify it on its own; for example, increasing the number of polygons, changing its shape and color, and even creating the texture from scratch. All based on a text prompt, a written request.

One to note down in case we need it.

Luma AI

Luma AI is an interesting project to scan real objects by recreating them in 3D. The operation is interesting, and the quality of the scans is reasonably good. For props or figures in the background, I consider it more than acceptable, even in production.

It has also recently allowed you to create objects, and thus characters, in 3D from a text prompt. With the classic “imagine” command, already made famous by the MidJourney image generator. But here, again, the quality is not sublime. Good experiments, but definitely not usable for production purposes.

3D characters and objects without artificial intelligence

From all of this we understood only one thing: A.I. as of today, January 2023, still does not allow us to have good 3D models. Since we have to keep the budget low, however, let’s achieve them with the tools already available.

3D characters in our short film

I won’t go into a lot of research here, but I evaluate two 3D character creation tools that I already know: the simple Reallusion Character Creator, and Epic’s fantastic MetaHuman.

We will need 3 characters for our story: Maria, Jake, and Sarah.

MetaHuman Creator

Actually, in the case of MetaHuman there is a change from my past: I used the beta of MetaHuman Creator. It is phenomenal in that it has given me the ability to take advantage of the computing power of Epic’s servers by creating characters in a work break directly with my laptop (which only needs to receive a video stream).

So by requesting “Early Access” with one’s Epic Games account from metahuman.unrealengine.com, we end up with a choice of possible characters.

We imagine that all three are between the ages of 20 and 40, otherwise the script does not tell us much about them.

Jake

I choose to begin by selecting Aoi, as Jake. I don’t know, that beard gives me the idea of “adventurer.”

The software warns that some elements of the character (specifically the hair) are still under development and will therefore be displayed only at LOD (level of detail) 0 and 1, the two most detailed levels. For us that is fine: the destination is a pre-rendered video at the highest quality, not a real-time video game.

A few changes to the character (shirtless, eye color, more “suitable” shoes and pants), and he is saved. Then we will export it with the Quixel Bridge plugin of Unreal Engine 5.

Maria

Let’s move on to Maria. I asked ChatGPT to come up with her characteristics, and the answer was that she could be a woman around 30 years old. Brown hair, shoulder-length and pulled back into a ponytail. Brown eyes, intense and deep, and of Latin ethnicity, with skin tanned from her outdoor adventures.

Let’s try to create her, starting from Roux. We make a Blend with Lena, Kendra and Tori, who seem suitable for modifying her features (and thus her ethnicity) a bit. Then we give her a ponytail and brown eyes, modify the texture of her skin to add a few years, remove the make-up she would hardly be wearing in the middle of the jungle, change her clothing, and that’s it.

Sarah

Finally, Sarah. According to ChatGPT she is about 25 years old, with short, wavy blond hair. Blue eyes, lively and inquisitive; around 1.70 meters tall; slender and muscular, indicating an active and sporty person; and of Northern European descent, with fair and delicate skin.

We start from Vivian and apply various modifications until we get something close to what is required. Clearly MetaHuman has many limitations, even more so in this online version. For example, we have practically no way of working on the body, so “muscular” is a feature we will have to give up, unless we model it later. But, first, I am not a 3D modeler; and second, this is a zero-budget project for educational purposes only. For the same reason, there is no point in spending more time on it than necessary.

Finally, let’s leave them there; we’ll create the animations with mannequins and then retarget them to the MetaHuman characters directly in Unreal Engine 5.

Conclusions

Let’s limit ourselves here for today; in one of the next articles I will talk specifically about animation and Motion Capture with artificial intelligence (where it will be most useful to us), and then we will continue with environments, voices, music, and whatever else we need to finalize our little project.

I will give myself time to finish slowly, and possibly do other articles before continuing this one. For two reasons: they are elaborate operations, and artificial intelligence is in an explosive phase. An article written today may be old tomorrow. Maybe tonight.

Therefore, since we will need this information much more toward the end of this year for the actual production of a fulldome story, let us keep in mind all the news in the coming months.

As always, thank you for following me, and a hug.

2 Comments

Hello! I am Anna Dmitrieva, a director of the movies from Israel, now I am in Austin Texas. I have a script for a feature film about Holocaust. I want to know: how to make a budget of the movie by AI? Can you help me please? Thank you, sincerely yours Anna Dmitrieva

Hi Anna! Nice to meet you. I hadn’t even thought of using AI for script breakdown and budgeting, your comment has made me aware of a great idea! I haven’t tried them, but tools like FilmuStage and Saturation.io seem interesting. I’ll definitely try them as soon as we have the complete script, I might even write an article about it. But it still takes some time.